Thin Lens Camera

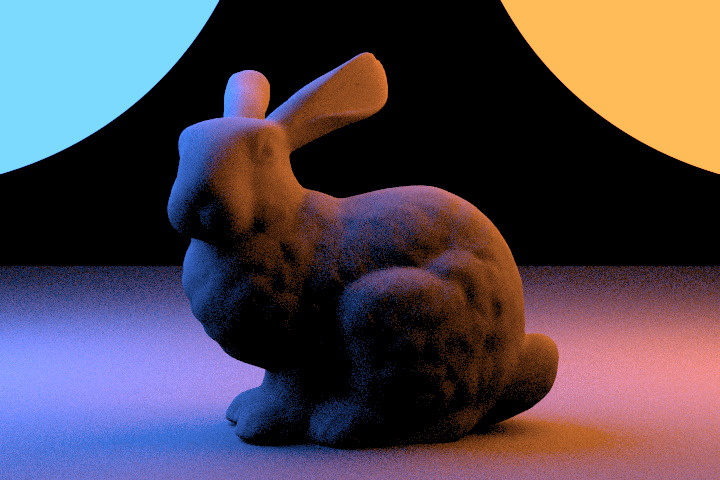

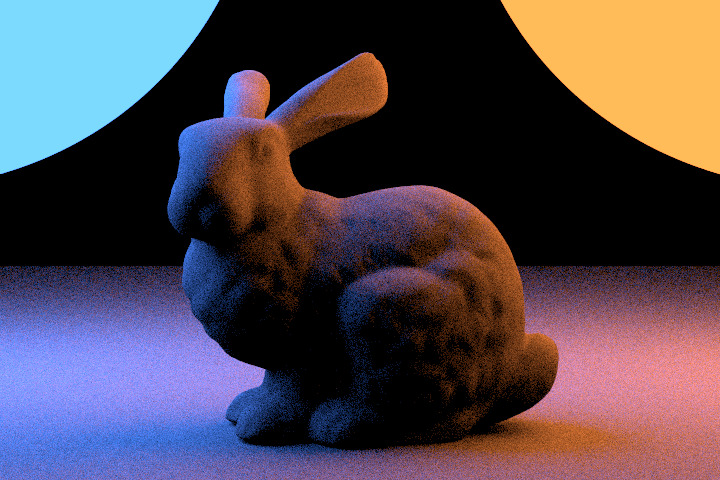

The thin lens camera is one of the simplest camera models. Unlike the pinhole model, it can simulate depth of field, which adds considerable realism to a scene.
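
To illustrate how depth of field arises in this model, here is a minimal sketch of thin lens ray generation in camera space. It is not our renderer's code: the function names, the parameters `lens_radius` and `focus_distance`, and the convention that the camera looks along +z with the lens centered at the origin are all assumptions made for this example. The idea is to take the ray a pinhole camera would shoot, find where it hits the plane of focus, and then shoot a new ray from a random point on the lens through that same point.

```python
import math


def sample_disk(u1, u2):
    """Map two uniform numbers in [0, 1) to a uniform point on the unit disk."""
    r = math.sqrt(u1)            # sqrt keeps the density uniform over the area
    phi = 2.0 * math.pi * u2
    return r * math.cos(phi), r * math.sin(phi)


def thin_lens_ray(direction, lens_radius, focus_distance, u1, u2):
    """Turn a pinhole ray through the origin into a thin lens ray.

    direction      -- pinhole ray direction in camera space, with z > 0 (assumed)
    lens_radius    -- radius of the lens aperture (0 gives a pinhole camera)
    focus_distance -- distance along z to the plane that is in perfect focus
    u1, u2         -- uniform random numbers in [0, 1)
    """
    dx, dy, dz = direction

    # Point where the pinhole ray crosses the plane of focus z = focus_distance.
    t = focus_distance / dz
    fx, fy, fz = dx * t, dy * t, dz * t

    # Random origin on the lens, which lies in the plane z = 0.
    lx, ly = sample_disk(u1, u2)
    origin = (lens_radius * lx, lens_radius * ly, 0.0)

    # New direction: from the lens sample towards the point in focus.
    vx, vy, vz = fx - origin[0], fy - origin[1], fz - origin[2]
    norm = math.sqrt(vx * vx + vy * vy + vz * vz)
    return origin, (vx / norm, vy / norm, vz / norm)
```

All rays generated for one pixel meet at the plane of focus, so objects there stay sharp, while objects nearer or farther are hit by diverging rays and appear blurred. A larger `lens_radius` gives a stronger blur, and a radius of zero reduces the model to the pinhole camera.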

One challenge with camera models is that each further step in realism takes disproportionately more effort.

We tried to implement the realistic camera model from PBRT, but we had to give up on it because of the time constraints of the course.

The thin-lens camera is a good compromise between realism and effort.